Imagine you're working on a HUGE project. We're talking 100 MILLION users already using the app, and every day, thousands of new people are joining! Your job? Make sure nobody picks a username that's already taken. Sounds simple, but doing it well is trickier than you'd think! 🧩

Most beginners would write a simple database query like this:

If that was your first instinct too, don't worry! But hold on - I'm about to show you how to make this lookup MUCH faster, going from O(n) (slow) to O(1) (super fast). Let's dive in! ✨

Why The Simple Way Is Too Slow

Databases check data row by row, one after another. That's like checking names on a list by starting at the top and going down until you find a match (or reach the end). Before it can even start checking, it needs to load all that data into memory, which takes time too.

Let's say we have 24 million users - that's 24 million rows to check! And as more people join, our database gets bigger and bigger. If we have to check EVERY row EVERY time someone picks a username, our app will get slower and slower. We need a smarter approach!

Would Caching Help?

Caching is like having a cheat sheet of answers ready to go. It works great for information that:

- Doesn't change very often

- Gets looked up frequently

For example, imagine building a stock market app like Bloomberg. Stock prices change every second! If you cached that info, users would see outdated prices instead of real-time updates. Not good!

With millions of usernames that could change (people might delete accounts or change usernames), caching isn't reliable enough. We need something even faster!

What About Binary Search?

Binary search is one of the fastest search methods out there. It works like the number guessing game where you keep cutting the range in half: "Is it higher than 50? Yes? Then is it higher than 75?" and so on.

With 100 million records, binary search could find a result in just 27 steps! That's MUCH better than checking all 100 million one by one.

But there's a catch - binary search only works if the data is already sorted. Every time a new user joins, we'd need to re-sort ALL usernames, which would be super slow. Not a good solution either!

Bloom Filters: The Perfect Solution

A Bloom Filter is a special tool that can tell us, almost instantly:

- "This username DEFINITELY doesn't exist" (100% accurate)

- "This username MIGHT exist" (small chance of false positives)

It's incredibly space-efficient and blazing fast. Unlike regular data structures, it doesn't store the actual values, just a fingerprint of them.

You know how HashMaps and Sets can find things super fast because they use hashing? Bloom Filters use similar magic but use way less memory!

How Bloom Filters Work - The Simple Version

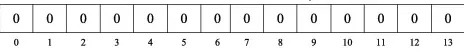

Imagine a big row of light switches, all turned OFF to start.

When a new username is created, the Bloom Filter:

- Uses a special formula (hash function) to decide which switch to flip

- Flips that switch to ON

The formula looks like this:

hash(username) % N = which switch to flip

Later, when someone wants to use a username, we use the same formula to check if that switch is ON:

- If the switch is OFF → Username is DEFINITELY free to use

- If the switch is ON → Username MIGHT be taken (better check the database to be sure)

Dealing With False Positives

What if two different usernames ("john" and "mark") get assigned to the same switch? That's called a "false positive" - our Bloom Filter might say "mark" is taken when it's actually free!

To reduce these mix-ups, we use MULTIPLE hash functions. Each username flips SEVERAL switches instead of just one. This makes our filter more accurate.

While we can never eliminate false positives completely, we can make them super rare by:

- Using more switches (bigger bit array)

- Using more hash functions

The math wizards among us can even calculate exactly how many of each we need to reach our desired accuracy level!

When To Use What

Remember: An O(n) approach isn't always bad, and an O(1) solution isn't always perfect. The key is knowing what YOUR system needs.

For username lookups in big systems with millions of users, Bloom Filters offer the perfect balance of speed and memory efficiency. They'll tell you instantly if a username is available, and only occasionally send you to the database for a double-check.

In a future post, I'll talk about cases where sometimes the slower approach actually works better than the faster one (sounds crazy, but it's true)!

If you enjoyed learning about Bloom Filters, share this post with your friends! Help them level up their knowledge too! 🚀

Bloom Filter Quiz

See how well you understood Bloom Filters

Question 1 of 6

What can a Bloom Filter tell you with 100% certainty?